As the conversation on A.I.-Generated Art continues, I’m seeing a tidal wave of existential dread, rage and anger from artists who feel threatened by the machine and its outputs.

I’m not going to try to unravel or dismiss those emotions. I think those feelings are valid in many ways. My only problem with a lot of the posts I’m seeing is that feelings cannot be argued in a court of law.

But facts can.

In some cases, people are reacting emotionally to bad or incomplete information. If we hope to win any meaningful concessions from the developers of AI Art Generators, or apply limits to their use…everyone absolutely does need to understand how these machines are built.

So let’s dig into it, and try to break things down into plain language and terms that non-experts can easily understand.

What Is Training Data?

In order to mimic the task of translating words into images, the AI has to absorb a huge amount of information. The combined unit of image + words is called a “clip”. It’s probably best to think of it as the digital version of an image cut from a catalog or book, complete with the digital caption—like this self-portrait of Vincent Van Gogh, which was released by The Metropolitan Museum of Art in 2017 into the public domain, along with 375,000 other images of priceless art, as part of its new Open Access Policy.

In order to teach a machine to make art, you have to show it a lot of clips. MidJourney and some of its competitors are trained on a massive data set called LAION 5B, which contains not just millions, but billions of images—5.85 billion, to be precise.

These images were obtained by crawling or “scraping” the Internet. The builders of the data set scraped up billions of images from publicly available web pages and their metadata, and then broke the resulting package down into clips to be used for machine learning and other research.

While some portion of this data is clearly in the public domain—MidJourney finds it very easy to imitate the works of Vermeer, Van Gogh, and Renaissance artists like Michelangelo and Da Vinci—a sizable majority of it is not. The AI has no problem recognizing the names and producing the likeness of popular characters and actors/actresses in the modern era, for example, or copying the styles of most popular artists of the 20th and 21st centuries, whose works are still under copyright.

How Does an AI Image Generator Use Its Training Data?

Every Artificial Intelligence, no matter how mundane its task, is built to somehow mimic or model human intelligence. We train a machine to think like a human being so that it can behave like a human being, and help human beings. Ultimately we want our AI’s to be able to assist a person the way that another human being would, if they had the time, training, opportunity and motivation to do so.

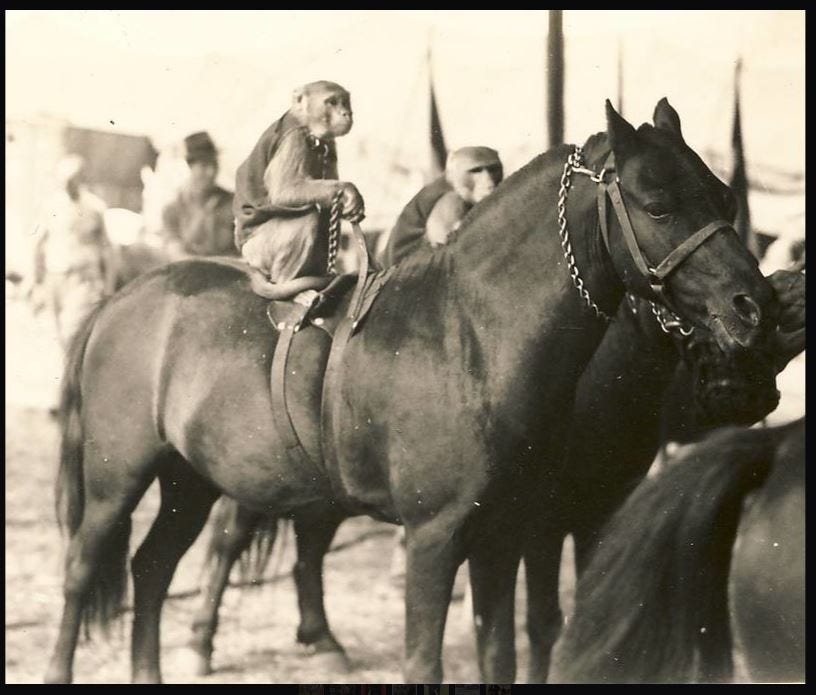

If you have ever been the teacher or babysitter of a small child who wanted you to draw them pictures, you know the task that MidJourney performs. “Draw me a horse, please!” the kid says. “A green horse, with a monkey on his back!”

Dutifully you pick up the crayon and draw your best approximation of a horse, based on your memory of the hundreds or thousands of real horses, photos of horses, etchings and sculptures of horses, toy horses, carousel horses, cartoon horses, and cave paintings of horses that your brain has absorbed throughout your life. Facing that blank sheet of paper, your brain reaches for its working concept of “horse” and tries to spit it back out again, based on your lifetime of experience with correctly labeled visual data.

The purpose of Training Data for an AI like MidJourney is to reproduce that level of human consciousness. In order to perform the task, it needs a huge volume of information. Thanks to Training Data, when you tell MidJourney to draw a green horse with a monkey on its back, it will have a knowledge base about horses and monkeys, about what “riding on a horse’s back” looks like, etc.

Unfortunately, it still isn’t as smart as a human being, so when you give it the child’s prompt, it usually spits out something silly, like this.

Yes, it’s terrible. In fairness, my crayon drawing would probably be pretty bad too. But since I’m human, I could probably remember for the length of a single drawing that the monkey and the horse shouldn’t both have hooves, and that monkeys and horses don’t have the same shape of head and face.

Then again, if the kid claps their hands and yells “yay” when I’m finished, or even just giggles wildly at how bad my drawing is…that’s a win for me. The child was briefly entertained, and perhaps they even learned something from the way my horse and monkey picture delighted and/or disappointed them.

That said, if our game was to continue, the kid might look at my drawing and say, “That doesn’t look much like a real monkey. Plus the monkey should be purple. Please try again.”

At this point, if I was really messing with the kid (or wanting to teach them a valuable lesson about clear communication, or the importance of learning to draw your own monkeys), I might try to draw something as wacky as this.

“That’s not a monkey!” the kid would yell when I was finished. “That’s a horse-man! And that’s not how a monkey rides on a horsie’s back! I saw them at the circus, it was totally different!”

“Ooooh,” I might say sagely. “A circus performer! Yes, you’re right, circus monkeys are very different.” And then, because I’m officially The Worst, I might draw something like this.

For those who immediately rush to point out that using an AI is definitely not at all like painting, drawing or photography, or even scribbling with a child’s crayon—I agree! I agree very strongly. This medium is something entirely different, and entirely new. Elke Reinhuber, in a conference paper for the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, dubbed it “Synthography”, and I suspect that this is the label that will stick in the future. But right now, what you call it and whether the tool can make “real art” is beside the point.

This little story about the kid who wants to see a horse and a monkey illustrates the relationship between the User and the Machine. But inside the AI, there is a third entity. In addition to the human User who prompts the image, and the Creative part of the machine which generates the image, there is a silent Critic inside which says, “Nope—wrong. You fail. Don’t show that image to the User at all”.

This combination of Creative and Critical is the warring heart of the image synthesis process. At the core of these machines, there is a Generative Adversarial Network. And fighting its outputs under current copyright laws is going to be very, very difficult.

A Completely Simplistic Introduction to GAN’s

If you already have some knowledge of computer programming, machine learning and Artificial Intelligence, I highly recommend that you skip this section of my essay and turn instead to this article by Jason Brownlee: “A Gentle Introduction to Generative Adversarial Networks (GANs)”, which was posted to MachineLearningMastery.com in 2019.

I read it, and it helped me to understand how the current wonders of AI Art Generation are being performed. The following is a bare bones summary of what I learned, in layman’s terms, and applied to AI Image Generators. (I hasten to add, of course, that any errors I make are entirely my own.)

Here goes.

In order to train an image generator like MidJourney, you need to feed it a huge amount of data. Human Users are going to hit it with a lot of ideas, subjects, and image parameters. Even with 5.85 billion images in its current Training Data set, a machine like MidJourney still struggles, a lot of the time, to understand what they want to see and deliver it reliably.

So the AI absorbs whatever training data you’re able to give it, to simply understand the parameters of its task. But when it comes to the second phase of its operation, where it has to actually “do the thing”, an AI like MidJourney splits into two personalities. Among professional AI developers, these two sides are called the Generator and the Discriminator, but for purposes of this discussion I think it’s more useful to label them The Artist and The Critic.

Both sides of the AI’s brain have full access to the Training Data that was used to teach the AI, but they use it in different ways.

The Artist studies the Training Data for patterns. When it wants to make art, it makes a prediction based on previous patterns in art and generates a new image based on what it has learned previously. The AI’s “thought process” might be translated like this: “Okay! I see all these thousands of images are labeled ‘horse’, so if I make a new image of something that has a similar pattern—four legs, a head shaped roughly like this, and proportions like that, and some hair sticking out here and there, it will probably be easily recognized by humans as a horse.”

The Critic uses the Training Data differently. When the Artist makes an image, the Critic checks to make sure the Artist isn’t cheating; it checks the 5.85 billion images in the Training Data to be sure that the new image isn’t already part of that set.

To reiterate: the Generative side of the AI isn’t allowed to just spit out something that already exists in the Training Data. If it tries, the Critic will reject that image.

Whatever image is finally output by the machine must be something new and different, which has not existed before. Which means that even if I wanted it to, and even if it had this image in its training data, MidJourney could never steal this photo and claim ownership of it…the way a real human artist can.

This technical break-down of the image generation process may seem boring, but I think it matters because I see a lot of opponents of AI Art saying a lot of things that simply don’t make sense.

AI’s do not, and cannot, “just copy” some human artist’s work.

AI’s also do not assemble collages of pre-existing work. The generative model is based on patterns, not on cutting and pasting elements out of Training Data. If you see a squiggle in the corner of an image, that is not “the mangled signature of a human being”. It’s an artifact of the AI’s pattern recognition. A lot of human-made images have a squiggle down in the corner; the AI wants to make art for humans, so it sometimes thinks that “Art” requires a squiggle.

I’m explaining all this not because I am “against real human artists”, or don’t care about them. I think it’s important to have this information, because the way these AI’s are built is going to make it extremely difficult to argue in court that the output images of MidJourney and other generative AI’s are “stolen” from human beings.

To be clear: copyright law already has specific protections in place for what we call “transformative works”. The Fair Use doctrine states that it is not illegal to create work that is derivative. Even incorporating some easily recognized aspects of an original work into a derivative piece is considered legal if you are doing so for a reason—to comment on it, to satirize or parody it, etc.

What this means in practical terms is that your work can be wildly derivative of someone else’s ground-breaking, original vision…and still get a pass. These are the laws that protect most of the derivative crap we see in the world around us.

It’s the reason that both Brave New World and 1984 are considered masterpieces of original science fiction rather than plagiarized garbage stolen from the original author of the science fiction novel WE, Yevgeny Zamyatin. Despite the fact that there is ample proof that both Orwell and Huxley read the original novel in its French translation, long before writing their variant takes on its plot and conflicts.

The same fair use doctrines protect the entire corpus of songs performed by Weird Al Yankovic. Anyone can tell that Weird Al’s tracks are musically identical to the hit songs that he mimics. (In fact, I would argue that the resemblance is key to their humorous impact.) He often imitates the vocal stylings of the performers of the original song as well (which are also not protected by law). By changing the lyrics and introducing the silliness that he does, Weird Al transforms the original work into something new, and furthermore, something that is original to him. Copyright laws as they currently exist protect his right to do all this, under the Fair Use doctrine.

It’s important to note that Weird Al is not required to seek permission from the original artist, before he does all this. Nor is he required to share any profits with them. He can and does sue corporations for millions of dollars when they rip him off, and he would not need to pay any of those dollars to the original rights holder if he was not so inclined.

But HE DOES seek permission from the original artist! And he DOES share the profits from his work! Not because the law requires it, but because his conscience demands it. And because in general, life in the arts is about relationships. Every Weird Al contract is negotiated on an individual basis, but so far as I know, he doesn’t parody a song when he can’t work something out with the original composer.

This behavior is called self-regulation. And frankly, I would like to see a hell of a lot more of it in Big Tech, especially in teams working on AI’s.

LAION 5B and the Problem of Ethical Training Data

The Training Data set that an effective AI Art Generator needs—5.85 billion images—may not be possible to build or obtain by ethical means, at least at the moment. Right now, the AI devs and content scrapers are pushing for an “opt-out” model, which places the burden of searching the data set and finding any image that violates your copyright and privacy on the victim, not the perpetrator.

But this is not how consent works, in my opinion. Consent is the presence of “yes”, not the absence of “no”. Scraping up copyrighted or privacy-invasive images from the Internet, in ways that might impact millions or billions of people who cannot give their ACTIVE consent to your intended use for them, is dubious at best.

This being said…how do you get this much data with a guarantee that it is all perfectly clean? If the only way to do this is to scrape billions of images from the web, some of them may be in the public domain, but only a fraction of the total. And the data set is so huge that it seems impossible to curate or restrict it enough that it could be used ethically.

To check the copyright of every clip in the LAION 5B data set, even if you only spent one minute determining the status of each image, would take 11,130 years.

And even if you started with a much smaller set like LAION Aesthetics, which contains a mere 120 million images, investigating one minute per image would take you 228 years.
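For anyone who wants to check those two figures, here is a minimal sketch of the arithmetic in Python. It assumes a single reviewer working around the clock with no breaks, and a 365-day year; the function name `years_to_review` is just an illustrative label, not something from the LAION project.

```python
# Minutes in a 365-day year: 60 minutes * 24 hours * 365 days = 525,600.
MINUTES_PER_YEAR = 60 * 24 * 365


def years_to_review(image_count: int, minutes_per_image: float = 1.0) -> float:
    """Years of nonstop work needed to review every image once.

    Assumes one reviewer, no breaks, and a fixed time per image.
    """
    return image_count * minutes_per_image / MINUTES_PER_YEAR


# Image counts as quoted in the essay.
laion_5b_years = years_to_review(5_850_000_000)
laion_aesthetics_years = years_to_review(120_000_000)

print(f"LAION 5B: about {laion_5b_years:,.0f} years")
print(f"LAION Aesthetics: about {laion_aesthetics_years:,.0f} years")
```

Under those assumptions the results round to 11,130 and 228 years. A more realistic schedule of eight-hour days would roughly triple both numbers.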

At this sitting, I don’t have a final answer or a quick fix to make the problem of AI Art Generators go away. But I do believe that Training Data might be the Achilles’ heel of MidJourney and many of its competitors, and I do feel it’s the weak point where targeted legal and regulatory attacks on the tech and its developers are most likely to succeed.

Some variation of the Fair Use laws would probably always protect the OUTPUT of an AI Art Generator. Fair Use laws are the reason that no one bothers trying to sue the authors of fan fiction and the creators of fan art, too—even if they do sometimes earn a substantial income through their pillaging. Taking someone to court over copyright infringement costs money on both sides, and even if the person who wrote a derivative work could fill a swimming pool with thousand-dollar bills from sales of their “art”, you probably couldn’t win a dime suing them.

Current laws don’t cover Training Data as a form of exploitation because this form of exploitation didn’t exist when they were written. But if the AI Art Generator made with the scraped data can be proven to be a threat to the livelihood of any artist in particular, then images fed as Training Data into an AI Art Generator would not be protected by copyright law.

Destroying the livelihood of the original creator cannot by definition be Fair Use.

My Personal Opinion

Is it immoral to scrape public data and use it to train an AI?

People keep asking me this question, and I keep having to tell them the ugly truth: I am not 100% sure.

I have three reasons for my uncertainty.

One, I don’t really buy into the arguments that synthography isn’t art.

Two, because I’ve yet to see compelling evidence that AI-Generated Art trained on the work of a living artist is a threat to that artist’s livelihood or estate.

And three, because I’m not sure that what the AI and its developers are doing with Training Data is morally different from what every professional artist I’ve ever met has done, when they used Google to find a reference image—or bought books full of someone else’s art to study, so they could sharpen their mastery of a certain subject or style.

Nonetheless, my gut instinct is telling me that we ARE entering dangerous waters. No matter how you look at it, this tech has the potential to break society. And it would not be the first time that a disruptive tech has broken society.

More on that later, in my letter to Michael Spencer—a reply to his post below.

Another fantastic read. If you ever want to break down an A.I. topic as a guest post on my A.I. Newsletter just let me know and if you did so, feel free to link back to articles like these!